Avoid These Costly Mistakes When Choosing Your Enterprise Data Historian

Jul 19, 2022 9:04:41 AM / by Jeff Knepper posted in Data Historian

When companies begin their search for a data historian or a time series database to store large amounts of process data, they often evaluate solutions on features and offerings. Rather than asking the right questions, they mistakenly structure their RFIs and requirement lists around these three areas...

The Difference Between Historians: Data, Process, Site, Enterprise and Cloud

Nov 16, 2021 3:25:31 PM / by Jeff Knepper posted in feature

If you have spent any time researching industrial databases, you have likely run into several different labels for historians. So what's the difference between them and, most importantly, which one do you need to get the job done?

Canary today announced it has become the historian solution of choice for The Smart Factory @ Wichita, a new Industry 4.0 immersive experience center by Deloitte.

Replacing the CygNet Historian for Better Data Access

Feb 24, 2021 1:18:56 PM / by Jeff Knepper

Organizations using the CygNet SCADA platform struggle with data access and reporting. The included CygNet VHS (Value History Service) historian is not optimized for performance or data retrieval, and accessing data from the archive is difficult and cumbersome. A lack of modern reporting and trending tools results in poor data visualization. Overall, the historian is not suited to serve as an enterprise solution and should not be used as one.

G5 Consulting Demonstrates MQTT Sparkplug With Canary

Jan 25, 2021 10:53:30 AM / by Jeff Knepper posted in MQTT

Interested in leveraging Ignition, Canary, and MQTT Sparkplug? No problem! They work together out of the box with a simple setup. Watch as Dave Schultz of G5 Consulting, a Canary Partner, shows just how easy it can be to move data from the edge to the enterprise using MQTT Sparkplug and Canary.

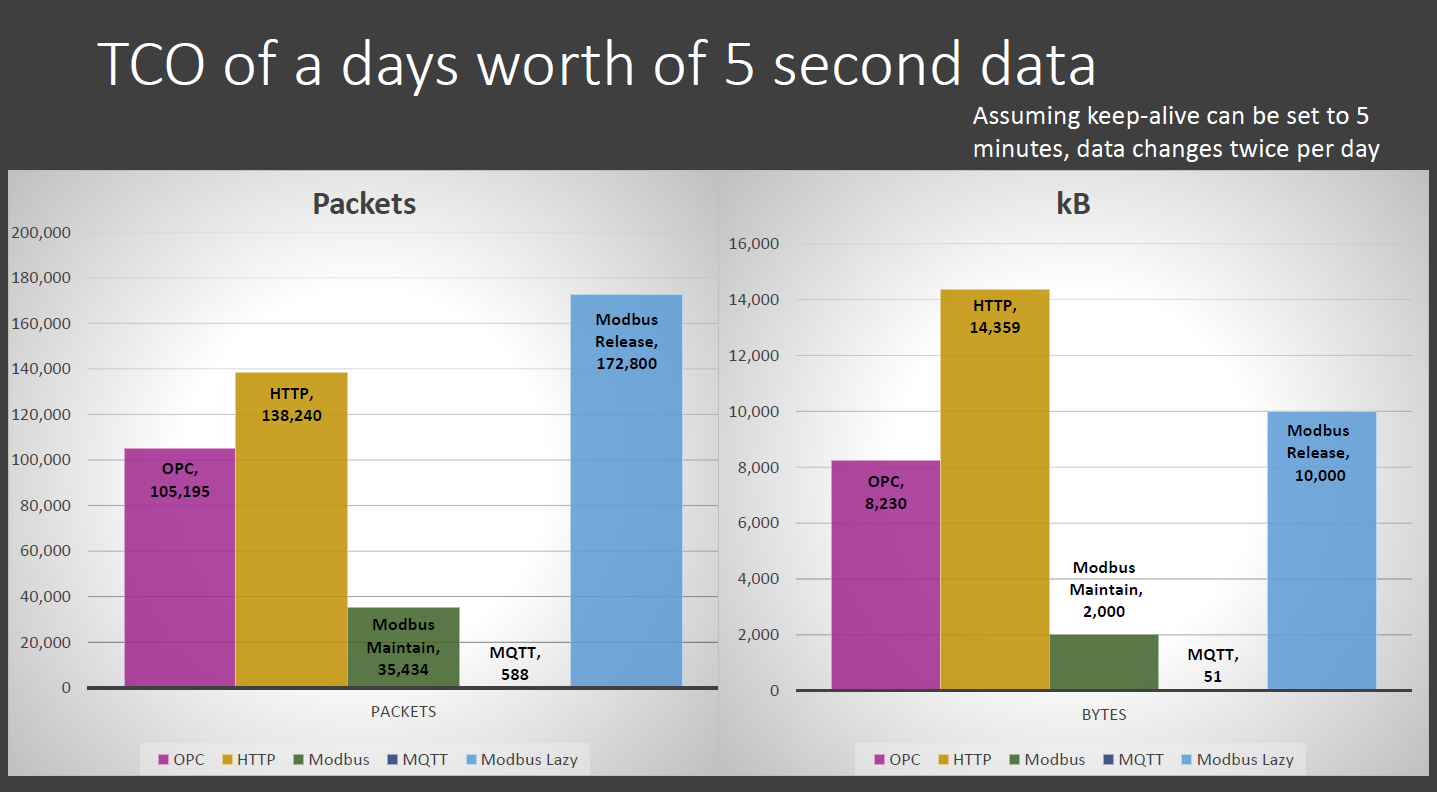

MQTT Sparkplug: Edge-Driven, Report-by-Exception, Best-in-Class IIoT

Jan 22, 2021 11:26:00 AM / by Jeff Knepper posted in Canary Collectors

If you would like a better understanding of MQTT, or are having a tough time wrapping your head around what Sparkplug is and how it relates to MQTT, this post is for you!
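One concrete way Sparkplug B builds on plain MQTT is by fixing the topic namespace and the set of message types. As a minimal sketch (written for illustration, not taken from the post or from Canary's software), the Python below splits a topic of the form `spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]` into its parts. It handles only edge node and device messages; host `STATE` messages use a different topic layout and are rejected here. The group, node, and device names in the usage line are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Edge node (N*) and device (D*) message types from the Sparkplug B
# specification; host STATE messages are deliberately not handled here.
MESSAGE_TYPES = {
    "NBIRTH", "NDEATH", "NDATA", "NCMD",
    "DBIRTH", "DDEATH", "DDATA", "DCMD",
}


@dataclass
class SparkplugTopic:
    group_id: str
    message_type: str
    edge_node_id: str
    device_id: Optional[str] = None


def parse_sparkplug_topic(topic: str) -> SparkplugTopic:
    """Split spBv1.0/<group_id>/<message_type>/<edge_node_id>[/<device_id>]."""
    parts = topic.split("/")
    if len(parts) not in (4, 5) or parts[0] != "spBv1.0":
        raise ValueError(f"not a Sparkplug B node/device topic: {topic!r}")
    _, group_id, message_type, edge_node_id, *rest = parts
    if message_type not in MESSAGE_TYPES:
        raise ValueError(f"unknown message type: {message_type!r}")
    device_id = rest[0] if rest else None
    # Device-level messages (D*) must name a device; node-level (N*) must not.
    if message_type.startswith("D") != (device_id is not None):
        raise ValueError(f"device_id mismatch for {message_type}: {topic!r}")
    return SparkplugTopic(group_id, message_type, edge_node_id, device_id)


print(parse_sparkplug_topic("spBv1.0/Plant1/DDATA/Gateway01/Pump07"))
# SparkplugTopic(group_id='Plant1', message_type='DDATA', edge_node_id='Gateway01', device_id='Pump07')
```

Because the namespace is fixed, any Sparkplug-aware consumer can tell from the topic alone which edge node and device a message came from and whether it is a birth, death, data, or command message.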

Understanding How To Calculate Historian Disk Space

Sep 1, 2020 10:19:39 AM / by Jeff Knepper posted in Canary Historian

"What type of disk space will the Canary Historian need?" is a common (and valid!) question, but the answer is not necessarily as simple as it might sound...

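A first-pass disk space estimate is often framed as the number of values stored multiplied by the on-disk cost of each value. The sketch below assumes you already know two inputs: the average rate at which each tag actually records a value (with report-by-exception this is usually well below the scan rate) and an average bytes-per-value figure after compression. That bytes-per-value number is a hypothetical input here, not a published Canary figure, and is best measured from your own archive.

```python
SECONDS_PER_DAY = 86_400


def estimate_disk_bytes(tag_count: int,
                        avg_values_per_second: float,
                        bytes_per_value: float,
                        retention_days: float) -> float:
    """Rough storage estimate for a historian archive.

    avg_values_per_second: average recorded values per tag per second
        (after any deadband or report-by-exception filtering).
    bytes_per_value: ASSUMED average on-disk cost per value, including
        timestamp, quality, and compression effects.
    """
    values_stored = tag_count * avg_values_per_second * SECONDS_PER_DAY * retention_days
    return values_stored * bytes_per_value


# Hypothetical example: 10,000 tags, one value every 10 s, 10 bytes/value, 1 year.
estimate = estimate_disk_bytes(10_000, 0.1, 10, 365)
print(f"{estimate / 1e9:.1f} GB")  # prints "315.4 GB"
```

The arithmetic is simple; the hard part is choosing realistic inputs, since update rates vary widely from tag to tag and compression depends on how the data behaves.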
Enterprise Data Historian Pricing Comparison for AVEVA PI, Ignition, and Canary

Apr 22, 2020 11:24:44 PM / by Jeff Knepper posted in Canary Historian

Data historians are key to an organization's understanding of its processes, but some clients hesitate to purchase a data historian because of cost. This guide prices popular historian options, including AVEVA PI, Canary, and Ignition by Inductive Automation, to help you decide which one offers the best value.

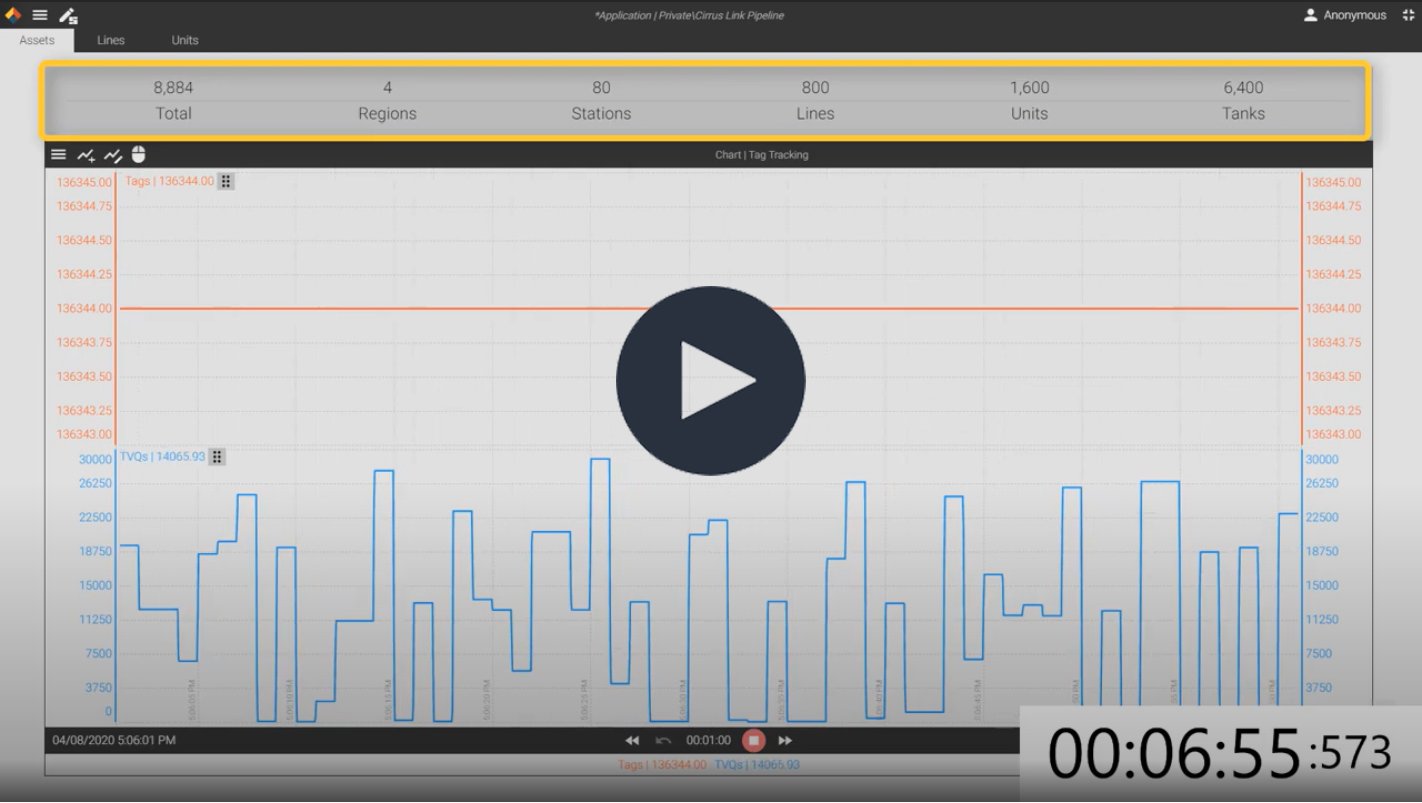

Automatically Discover New MQTT Tags and Group By Asset

Apr 20, 2020 2:12:25 PM / by Jeff Knepper posted in industry 4.0, Canary Historian, Canary Collectors, MQTT

Some organizations constantly add new tags and assets to their operation. If this is true for you, you or your team are likely spending too much time manually adding tags and assigning them to your asset model.

Watch the video below to see how easy it could be!
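The general idea behind automatic discovery can be sketched generically: compare incoming tag names with the set already known, then derive an asset from each new name. The Python below is a hypothetical illustration, not Canary's implementation. It assumes a naming convention in which a tag path's last `/`-delimited segment is the measurement and everything before it identifies the asset (for example `Plant1/Pump07/Pressure`); the tag names themselves are made up.

```python
from collections import defaultdict


def discover_new_tags(known: set, incoming: list) -> list:
    """Return tags not seen before (in first-seen order) and add them to `known`."""
    new = [tag for tag in dict.fromkeys(incoming) if tag not in known]
    known.update(new)
    return new


def group_tags_by_asset(tag_paths: list, delimiter: str = "/") -> dict:
    """Map each asset path to its measurements, assuming the last path
    segment is the measurement and the rest names the asset."""
    assets = defaultdict(list)
    for path in tag_paths:
        asset, sep, measurement = path.rpartition(delimiter)
        if not sep:
            asset = "(unassigned)"  # no asset prefix in this tag name
        assets[asset].append(measurement)
    return dict(assets)


known_tags = {"Plant1/Pump07/Pressure"}
incoming = ["Plant1/Pump07/Pressure", "Plant1/Pump07/Flow", "Plant1/Pump08/Pressure"]
new_tags = discover_new_tags(known_tags, incoming)
print(group_tags_by_asset(new_tags))
# {'Plant1/Pump07': ['Flow'], 'Plant1/Pump08': ['Pressure']}
```

Keying assets on the tag path only works if naming is consistent across the operation; a real deployment would more likely map names onto an asset template or model.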

Understanding Your Data Historian Choices and Options

Apr 6, 2020 5:08:30 PM / by Jeff Knepper posted in Canary Historian, feature

More than ever, companies recognize the importance, and the necessity, of the data historian. With so many database options available, working through them can be confusing. This guide should make it easier to understand the choices you have when selecting a data historian for your industrial application.